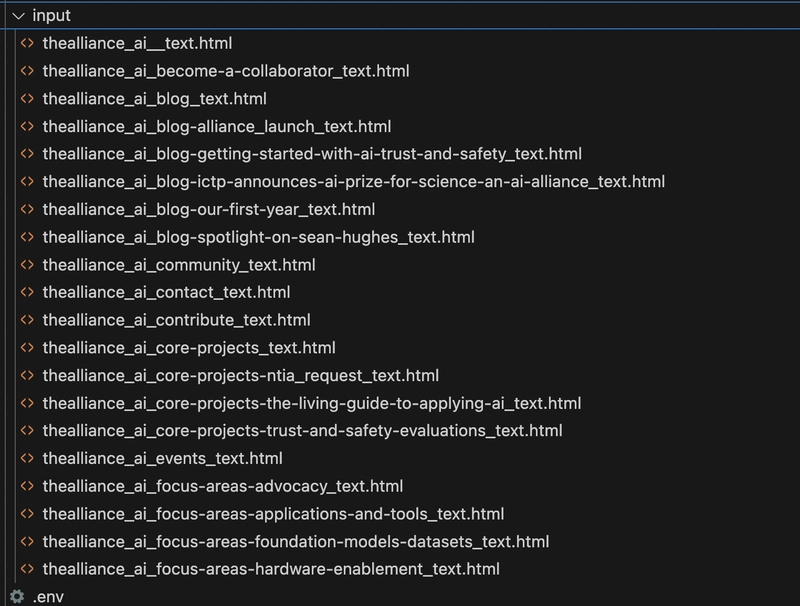

A hands-on exercise using “data-prep-kit” and storing the result as parquet files.

Introduction

Alongside Docling, IBM Research open-sourced another set of tools that can be very useful for preparing data for LLMs or AI agents. The tool is “Data Prep Kit”, and it is available in a public GitHub repository.

Features of Data-Prep-Kit

- The kit provides a growing set of modules/transforms targeting laptop-scale to datacenter-scale processing.

- The data modalities supported today are natural language and code.

- The modules are built on common frameworks for Python, Ray and Spark runtimes for scaling up data processing.

- The kit provides a framework for developing custom transforms for processing parquet files.

- The kit uses Kubeflow Pipelines-based workflow automation.

What is data-prep-kit good for?

The kit is particularly useful for developing custom transforms and for working with Parquet files.

Apache Parquet, as explained on its official homepage, is “an open source, column-oriented data file format designed for efficient data storage and retrieval. It provides high performance compression and encoding schemes to handle complex data in bulk and is supported in many programming language and analytics tools”.

[Image from https://parquet.apache.org/]

For some use cases, Parquet files are a better choice than CSV files. Unlike CSV, which stores data row by row, Parquet organizes data by columns. This columnar layout allows more efficient querying (a reader can load only the columns it needs) and better compression, which makes it well suited to large datasets. Parquet is also a better fit for distributed systems, while CSV remains a good choice for simpler, smaller datasets.
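To make the row-versus-column distinction concrete, here is a deliberately tiny, dependency-free sketch. It is not the Parquet format (there are no row groups, encodings or compression); it only illustrates why a columnar layout lets a reader fetch one column without parsing whole records, and why typed columns avoid CSV's everything-is-text round trip. The dataset and the helper names are made up for illustration.

```python
import csv
import io

FIELDS = ["id", "country", "score"]

# Toy dataset with three fields per record (illustrative only).
records = [
    {"id": i, "country": "FR" if i % 2 else "US", "score": i * 1.5}
    for i in range(1000)
]

# Row-oriented layout, as in CSV: each line holds one complete record.
buf = io.StringIO()
writer = csv.DictWriter(buf, fieldnames=FIELDS)
writer.writeheader()
writer.writerows(records)
csv_text = buf.getvalue()

# Column-oriented layout, a drastically simplified stand-in for Parquet:
# all values of one column are kept together, with their original types.
columnar = {name: [r[name] for r in records] for name in FIELDS}


def read_column_from_rows(text, name):
    """Row layout: every line must be parsed just to pull out one field."""
    return [row[name] for row in csv.DictReader(io.StringIO(text))]


def read_column_from_columns(store, name):
    """Column layout: the requested column is returned; the others are never touched."""
    return store[name]


scores_rows = read_column_from_rows(csv_text, "score")
scores_cols = read_column_from_columns(columnar, "score")
print(scores_rows[:3])  # CSV gives back strings: ['0.0', '1.5', '3.0']
print(scores_cols[:3])  # the columnar store keeps floats: [0.0, 1.5, 3.0]
```

With real Parquet files, pandas exposes the same idea through `pandas.read_parquet(path, columns=[...])`, which needs pyarrow or fastparquet installed and only reads the listed columns.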

With that background in place, let’s try one of the out-of-the-box samples from the data-prep-kit repository.

Sample web crawler code

Web crawling in Python is not a new subject, and there are some excellent frameworks for it; however, this toolkit also prepares the results and simplifies their later use with LLMs.

First, we need to prepare the environment (the steps are all documented, and they worked perfectly for me).

```shell
conda create -n dpk-html-processing-py311 python=3.11
conda activate dpk-html-processing-py311
```

The next step is to install the dependencies with `pip install -r requirements.txt`, where `requirements.txt` contains:

```text
### DPK 1.0.0a4
data-prep-toolkit==0.2.3
data-prep-toolkit-transforms[html2parquet]==1.0.0a4
data-prep-toolkit-transforms[web2parquet]==1.0.0a4

## HTML processing
trafilatura

# Milvus
pymilvus
pymilvus[model]

## --- Replicate
replicate

## --- llama-index
llama-index
llama-index-embeddings-huggingface
llama-index-llms-replicate
llama-index-vector-stores-milvus

## utils
humanfriendly
pandas
mimetypes-magic
nest-asyncio

## jupyter and friends
jupyterlab
ipykernel
ipywidgets
```
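Because the notebook depends on exact (and partly pre-release) pins, it can help to confirm, inside the activated environment, that pip really installed those versions. The following sketch uses only the standard library's `importlib.metadata`; the `PINNED` dict and the `check_pins` helper are my own illustration, not part of data-prep-kit.

```python
from importlib.metadata import PackageNotFoundError, version

# Exact pins copied from the requirements.txt above; extend as needed.
PINNED = {
    "data-prep-toolkit": "0.2.3",
    "data-prep-toolkit-transforms": "1.0.0a4",
}


def check_pins(pins):
    """Return {package: problem} for every pin that is missing or mismatched."""
    problems = {}
    for package, expected in pins.items():
        try:
            installed = version(package)  # looks up the installed distribution
        except PackageNotFoundError:
            problems[package] = "not installed"
            continue
        if installed != expected:
            problems[package] = f"installed {installed}, expected {expected}"
    return problems


if __name__ == "__main__":
    issues = check_pins(PINNED)
    if issues:
        for package, problem in issues.items():
            print(f"⚠️ {package}: {problem}")
    else:
        print("✅ All pinned packages match")
```

The comparison is a plain string match, which is enough for exact `==` pins like these; for range specifiers, a proper version parser such as the `packaging` library would be the better tool.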

I use JupyterLab to run the notebooks locally on my laptop.

You also need the two sample files provided with the example, which hold the required configuration and helpers: `my_config.py` and `my_utils.py`.
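If you want to follow along before fetching those files, note that the notebook cells in this post read only four settings from `MY_CONFIG`: `CRAWL_URL_BASE`, `CRAWL_MAX_DEPTH`, `CRAWL_MAX_DOWNLOADS` and `INPUT_DIR`. A hypothetical minimal `my_config.py` exposing just those names could look like this. Every value is a placeholder of mine; the file shipped with the example defines its own values and may contain more settings, so prefer it whenever you have it.

```python
# my_config.py: hypothetical minimal stand-in, NOT the file shipped with the example.
# Attribute names are the ones the notebook reads; every value is a placeholder.
import os
from types import SimpleNamespace

MY_CONFIG = SimpleNamespace(
    CRAWL_URL_BASE="https://thealliance.ai",       # seed URL (from the parameter table's example)
    CRAWL_MAX_DEPTH=2,                             # placeholder crawl depth
    CRAWL_MAX_DOWNLOADS=10,                        # placeholder download cap
    INPUT_DIR=os.path.join("workspace", "input"),  # placeholder download folder
)
```

`SimpleNamespace` is used only so that `MY_CONFIG.INPUT_DIR`-style attribute access works exactly as in the notebook cells.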

# Crawl a website

We will use the `dpk-connector` module.

References

- https://github.com/data-prep-kit/data-prep-kit/tree/dev/data-connector-lib

## Step-1: Config

```python
from my_config import MY_CONFIG
```

## Step-2: Setup Directories

```python
import os
import shutil

# Clear the input (download) folder: delete it if present, then recreate it empty
shutil.rmtree(MY_CONFIG.INPUT_DIR, ignore_errors=True)
os.makedirs(MY_CONFIG.INPUT_DIR, exist_ok=True)

print("✅ Cleared download directory")
```

## Step-3: Crawl the website

We will use the `dpk-connector` utility to download some HTML pages from a site.

**Parameters**

To configure the crawl, specify the following parameters:

| Parameter: type | Description |
| --- | --- |
| `urls: list` | List of seed URLs (e.g., `['https://thealliance.ai']` or `['https://www.apache.org/projects', 'https://www.apache.org/foundation']`). The list can include any number of valid URLs that are not configured to block web crawlers. |
| `depth: int` | Controls the crawling depth. |
| `downloads: int` | Number of downloaded files stored in the download folder. Because the crawler operates asynchronously, any of the visited URLs may be the ones retrieved (e.g., with `downloads=10`, any 10 of them), so consecutive runs can download different files. |
| `folder: str` | Folder where downloaded files are stored. If the folder is not empty, new files are added, or replace existing ones with the same URLs. |
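A crawl can take a while, so it may be worth catching obvious configuration mistakes before starting one. The sketch below builds the four keyword arguments from the table and checks them first. `crawl_params` is a hypothetical helper, and its checks (http/https seeds, positive integers, a non-empty folder) are my own assumptions, not rules documented or enforced by `dpk-connector`.

```python
from urllib.parse import urlparse


def crawl_params(urls, depth, downloads, folder):
    """Build the crawl keyword arguments from the table, failing fast on obvious mistakes.

    The validation rules are assumptions for illustration, not dpk-connector's own rules.
    """
    if not isinstance(urls, list) or not urls:
        raise ValueError("urls must be a non-empty list of seed URLs")
    for url in urls:
        parsed = urlparse(url)
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            raise ValueError(f"not an http(s) URL: {url!r}")
    for name, value in (("depth", depth), ("downloads", downloads)):
        # bool is a subclass of int, so reject it explicitly
        if not isinstance(value, int) or isinstance(value, bool) or value < 1:
            raise ValueError(f"{name} must be a positive integer, got {value!r}")
    if not isinstance(folder, str) or not folder:
        raise ValueError("folder must be a non-empty path string")
    return {"urls": urls, "depth": depth, "downloads": downloads, "folder": folder}


params = crawl_params(
    ["https://www.apache.org/projects", "https://www.apache.org/foundation"],
    depth=1,
    downloads=10,
    folder="downloads",
)
print(params["downloads"])  # 10
```

Since the dict keys match the keyword arguments used in the crawl cell, the result can be unpacked directly, e.g. `Web2Parquet(**params).transform()`.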

```python
%%capture
# Nested asynchronous I/O must be enabled in a notebook, because the crawler
# uses coroutines to speed up acquisition and downloads
import nest_asyncio

nest_asyncio.apply()

from dpk_web2parquet.transform import Web2Parquet

Web2Parquet(urls=[MY_CONFIG.CRAWL_URL_BASE],
            depth=MY_CONFIG.CRAWL_MAX_DEPTH,
            downloads=MY_CONFIG.CRAWL_MAX_DOWNLOADS,
            folder=MY_CONFIG.INPUT_DIR).transform()
```

```python
# Report how many files were downloaded
print(f"✅ web crawl completed. Downloaded {len(os.listdir(MY_CONFIG.INPUT_DIR))} files into '{MY_CONFIG.INPUT_DIR}' directory")

# List some files
# for item in os.listdir(MY_CONFIG.INPUT_DIR):
#     print(item)
```

As we can see, the application produced the expected result.
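The commented-out loop only prints file names. To get a quicker sense of what a crawl left behind, a small standard-library helper can count the files per extension and add up their sizes. `summarize_folder` is a hypothetical helper of mine, and it deliberately makes no assumption about which file types the crawler writes; finding that out is the point of the summary.

```python
import os
from collections import Counter


def summarize_folder(folder):
    """Count files per extension and total their size in bytes (top level only)."""
    counts = Counter()
    total_bytes = 0
    with os.scandir(folder) as entries:
        for entry in entries:
            if entry.is_file():  # skip sub-directories
                ext = os.path.splitext(entry.name)[1].lower() or "(none)"
                counts[ext] += 1
                total_bytes += entry.stat().st_size
    return counts, total_bytes
```

In the notebook, calling `summarize_folder(MY_CONFIG.INPUT_DIR)` right after the crawl cell returns the per-extension counts and the total number of bytes downloaded.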

Conclusion… and next steps

The data-prep-kit on GitHub is designed to streamline and simplify the often complex process of preparing data for analysis and machine learning. It offers a collection of modular and reusable tools. By providing these functionalities in a cohesive and well-structured framework, the toolkit aims to significantly reduce the manual effort and coding required for data preparation, enabling users to more efficiently get their data into a usable and high-quality state for downstream tasks.

As for my next step(s)… trying other features of the toolkit ✌️

Links

- Data prep kit github repository: https://github.com/data-prep-kit/data-prep-kit?tab=readme-ov-file

- Quick start guide: https://github.com/data-prep-kit/data-prep-kit/blob/dev/doc/quick-start/contribute-your-own-transform.md

- Provided samples and examples: https://github.com/data-prep-kit/data-prep-kit/tree/dev/examples

- Parquet: https://parquet.apache.org/