APIs are the backbone of modern applications, handling thousands (or even millions) of requests daily. But as traffic grows, a single server can quickly become overwhelmed, leading to slow responses, timeouts, crashes, and ultimately lost revenue.

This is where load balancing comes in. A load balancer distributes traffic across multiple servers so that your API remains fast, scalable, and highly available. This tutorial is part of a series on optimising API performance. You can read part 1 of this series, on caching, here.

In this guide, we’ll explore:

- When you should consider load balancing in your application

- How load balancing works

- Load balancing strategies

- Real-World Case Study: PayU

- Common load balancing issues and fixes

- Best practices

- Conclusion & Resources

1. When Should I Consider Load Balancing?

Not every API needs load balancing from day one. But you should strongly consider it if:

- Your API receives 1,000+ requests per second.

- Your server CPU usage consistently exceeds 70%.

- You need high availability (e.g., financial transactions, streaming).

- You’ve experienced downtime due to traffic spikes. Those graphs are telling you something.

- You need reliability. Robust load balancers such as Elastic Load Balancing perform health checks on upstream servers before distributing requests, which helps maximise performance and resiliency.

- You need an added layer of security. Load balancers can act as a buffer against DDoS attacks and enable features such as SSL encryption and WAF integration.

- You need to filter malicious traffic. Load balancers can be instructed to drop requests from suspicious sources so that they never reach your core application resources.

If any of these apply to your system, it’s time to implement a load balancer.

2. How Load Balancing Works

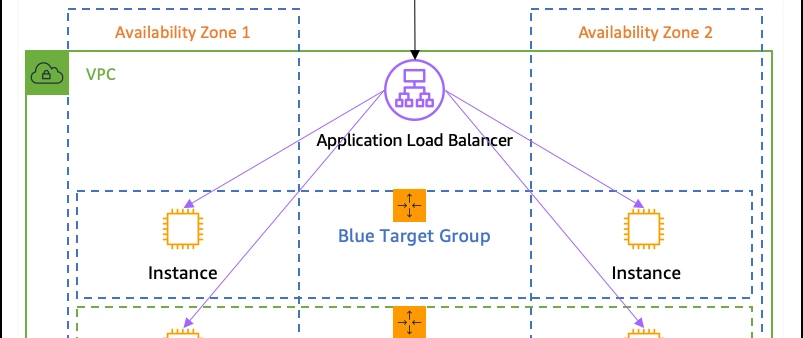

A load balancer acts as a middle layer between clients and backend servers. It distributes incoming network or application requests across multiple servers, or "targets", based on predefined rules.

A load balancer prevents overload and improves the performance, availability, and scalability of an application. It acts as a single point of contact for clients, monitoring the health of the servers and routing requests only to the healthy ones.

Example:

- Without load balancing: Requests go to one server, which can get overloaded.

- With load balancing: Requests are spread across multiple servers, improving speed and reliability and making it easier to scale.

Here’s a basic visualisation of how it works:

    Client Requests ---> Load Balancer ---> Server 1
                                       ---> Server 2
                                       ---> Server 3

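Nginx as a Load Balancer:

    # Required top-level block; the defaults are fine for this example
    events {}

    http {
        # Pool of backend servers that share the traffic
        upstream backend_servers {
            server api-server-1.local;
            server api-server-2.local;
        }

        server {
            listen 80;

            location / {
                # Forward every request to the pool defined above
                proxy_pass http://backend_servers;
            }
        }
    }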

This setup distributes requests between api-server-1.local and api-server-2.local.

3. Load Balancing Strategies

Load balancing strategies are the methods a load balancer uses to distribute network or application traffic across multiple targets. Each strategy defines rules intended to optimise resource utilisation, improve performance, and enhance the reliability of the system as a whole. We will explore a few of the common strategies below.

1. Round Robin (Default & Simple)

Sends each new request to the next server in the list, cycling back to the first, so requests are spread evenly across all servers.

Best for: APIs with similar server capacities.

Nginx illustration:

    upstream backend {
        server api1.example.com;
        server api2.example.com;
    }
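To make the rotation concrete, here is a minimal Python sketch of round-robin selection. It only illustrates the idea and is not how Nginx implements it; the `RoundRobinBalancer` class and its `next_server` method are hypothetical names, and the hostnames are the placeholders from the config above.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in a fixed, repeating order."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        self._rotation = cycle(list(servers))

    def next_server(self):
        # Each call returns the next server; after the last one we wrap around.
        return next(self._rotation)


balancer = RoundRobinBalancer(["api1.example.com", "api2.example.com"])
picks = [balancer.next_server() for _ in range(4)]
print(picks)
```

Four requests alternate between the two servers, which works well only when each server can handle a similar share of the load.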

2. Least Connections (Great for Heavy Requests)

Sends traffic to the server with the fewest active connections.

Best for: APIs with long-running or high-latency requests.

Nginx illustration:

    upstream backend {
        least_conn;
        server api1.example.com;
        server api2.example.com;
    }
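The selection rule itself is small enough to sketch in Python. This is an illustrative model under simple assumptions (a single process, and the caller reports when a request finishes), not Nginx's implementation; `LeastConnectionsBalancer`, `acquire`, and `release` are hypothetical names.

```python
class LeastConnectionsBalancer:
    """Tracks open connections per server and picks the least busy one."""

    def __init__(self, servers):
        # Every server starts with zero active connections.
        self.active = {server: 0 for server in servers}

    def acquire(self):
        # Choose the server with the fewest active connections
        # (ties go to the server listed first).
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call this when the request on `server` finishes.
        self.active[server] -= 1


lb = LeastConnectionsBalancer(["api1.example.com", "api2.example.com"])
first = lb.acquire()    # both idle, so the first server is chosen
second = lb.acquire()   # the first server is busy, so the second is chosen
lb.release(second)      # the request on the second server finishes quickly
third = lb.acquire()    # the second server is chosen again
print(first, second, third)
```

Because the first server is still holding a slow request, the third request goes back to the second server, which is what keeps long-running requests from piling up on one machine.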

3. IP Hash (Good for Session Persistence)

Routes all requests from a given client to the same server, chosen from the client’s IP address.

Best for: APIs that need session consistency (e.g. authentication, sticky sessions).

Nginx illustration:

    upstream backend {
        ip_hash;
        server api1.example.com;
        server api2.example.com;
    }
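The idea behind hashing on the client IP can be sketched in a few lines of Python. This is a simplified illustration: the `pick_server` function is hypothetical, the use of SHA-256 is an assumption made for the example, and Nginx uses its own hashing scheme.

```python
import hashlib


def pick_server(client_ip, servers):
    """Map a client IP to one server; the same IP always gets the same server."""
    # Use a stable hash: Python's built-in hash() of a string
    # can differ from one process to the next.
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["api1.example.com", "api2.example.com"]
a = pick_server("203.0.113.7", servers)
b = pick_server("203.0.113.7", servers)
print(a == b)  # True: repeated requests from one IP land on the same server
```

One trade-off of this simple modulo scheme is that adding or removing a server changes the mapping for many clients, so sessions stored on a single server can be lost when the pool changes.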

4. Weighted Load Balancing (Fine-Tuned Traffic Control)

Some servers are more powerful than others. This method sends more traffic to the stronger servers.

Best for: APIs running on mixed-capacity servers.

Example:

    upstream backend {
        server api1.example.com weight=3;
        server api2.example.com weight=1;
    }

Here:

- api1.example.com receives about three requests for every one sent to api2.example.com (roughly 75% of the traffic).
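One way to turn weights into a request order is a "smooth" weighted round robin, sketched below in Python. It is shown as an example technique rather than a description of any particular load balancer's internals; `WeightedBalancer` and `next_server` are hypothetical names.

```python
from collections import Counter


class WeightedBalancer:
    """Smooth weighted round robin: picks follow the weights without long bursts."""

    def __init__(self, weights):
        self.weights = dict(weights)
        self.total = sum(self.weights.values())
        self.current = {server: 0 for server in self.weights}

    def next_server(self):
        # Raise every server's running score by its weight...
        for server, weight in self.weights.items():
            self.current[server] += weight
        # ...pick the highest score, then penalise the winner by the total weight.
        chosen = max(self.current, key=self.current.get)
        self.current[chosen] -= self.total
        return chosen


wb = WeightedBalancer({"api1.example.com": 3, "api2.example.com": 1})
counts = Counter(wb.next_server() for _ in range(400))
print(counts)
```

Over 400 requests the split matches the 3:1 weights, and the lighter server's turns are interleaved with the heavier server's rather than bunched at the end of each cycle.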

4. Real-World Case Study: PayU

PayU, one of India's leading payments and fintech companies, serves over 450,000 merchants using more than 100 payment methods and cannot afford API slowdowns. PayU uses application load balancers to scale its service 10x to meet demand during peak periods.

5. Common Load Balancing Issues & Fixes

| Issue | Fix |
|---|---|
| Some servers get more traffic than others | Use Least Connections instead of Round Robin. |
| High latency even with load balancing | Enable Geo-DNS to route users to nearby servers. |
| Users randomly get logged out | Use session persistence (sticky sessions). |
| Unhealthy target servers | Implement health checks and use auto scaling groups so that new servers are spun up when one or more servers become unhealthy. |

6. Best Practices for Load Balancing APIs

- Choose the right strategy – Round Robin is simple, but Least Connections or Weighted Load Balancing may be better.

- Enable health checks – Automatically remove failing servers from the pool.

- Use caching alongside load balancing – Combining both boosts performance further.

- Monitor and tweak – Use tools like Prometheus & Grafana to track load balancer health.
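
The health-check practice above can be modelled with a small Python sketch. It assumes a simple rule (a server leaves the pool after a fixed number of consecutive failed probes and returns after one success); `HealthCheckedPool`, `record`, `healthy_servers`, and the `max_failures` parameter are hypothetical names, and real load balancers expose their own thresholds and probe settings.

```python
class HealthCheckedPool:
    """Keeps a server in rotation until it fails too many probes in a row."""

    def __init__(self, servers, max_failures=3):
        self.max_failures = max_failures
        self.failures = {server: 0 for server in servers}

    def record(self, server, ok):
        # A success resets the counter; a failure increments it.
        self.failures[server] = 0 if ok else self.failures[server] + 1

    def healthy_servers(self):
        # A server leaves the pool after max_failures consecutive failed probes.
        return [s for s, n in self.failures.items() if n < self.max_failures]


pool = HealthCheckedPool(["api1.example.com", "api2.example.com"], max_failures=2)
pool.record("api2.example.com", ok=False)
pool.record("api2.example.com", ok=False)
after_failures = pool.healthy_servers()   # api2 has been removed
pool.record("api2.example.com", ok=True)
after_recovery = pool.healthy_servers()   # api2 is back in rotation
print(after_failures, after_recovery)
```

Requiring several consecutive failures before removing a server avoids dropping it because of a single slow or lost probe.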

7. Conclusion & Resources

Load balancing is essential for scaling APIs and ensuring high availability. Whether you’re handling a small SaaS app or a global platform like PayU, the right load balancing strategy prevents slowdowns, reduces costs, and improves user experience.

Continue with part 3 of this series, Asynchronous Processing & Queues, here.
