🧵 Fixing Common Issues When Installing llama-cpp-python on Windows

If you're working with LLMs and trying out llama-cpp-python, you might run into some frustrating issues on Windows — especially when installing or importing the package.

I recently ran into both build errors during installation and runtime errors related to missing DLLs. In this post, I’ll walk through the exact problems I faced and how I fixed them, hopefully saving you a few hours of debugging.

🔧 Problem 1: Build Failure During pip install

If you're installing llama-cpp-python on Windows and pip has to build it from source (which happens when no prebuilt wheel matches your Python version and platform), the build goes through CMake and you might see errors like:

```
× Building wheel for llama-cpp-python (pyproject.toml) did not run successfully.
│ exit code: 1
╰─> [20 lines of output]
    *** scikit-build-core 0.11.1 using CMake 4.0.1 (wheel)
    *** Configuring CMake...
    scikit_build_core - WARNING - Can't find a Python library, got libdir=None, ldlibrary=None, multiarch=None, masd=None
    -- Building for: Visual Studio 17 2022
    -- The C compiler identification is unknown
    -- The CXX compiler identification is unknown
    CMake Error at CMakeLists.txt:3 (project):
      No CMAKE_C_COMPILER could be found.
    CMake Error at CMakeLists.txt:3 (project):
      No CMAKE_CXX_COMPILER could be found.
    -- Configuring incomplete, errors occurred!
    *** CMake configuration failed
    [end of output]

note: This error originates from a subprocess, and is likely not a problem with pip.
ERROR: Failed building wheel for llama-cpp-python
Failed to build llama-cpp-python
ERROR: Failed to build installable wheels for some pyproject.toml based projects (llama-cpp-python)
```

Two Stack Overflow threads match the error I encountered: "error-while-installing-python-package-llama-cpp-python" and "the-cxx-compiler-identification-is-unknown".

Solution: Install Required Build Tools

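The key lines are `No CMAKE_C_COMPILER could be found` and `No CMAKE_CXX_COMPILER could be found`: CMake could not find a C or C++ compiler. The easiest way to get one is through the Visual Studio Installer (the free Build Tools for Visual Studio edition is enough). Make sure to install the following components: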

- Windows 10 SDK (version 10.0.x)
- C++ CMake tools for Windows
- MSVC v14.x C++ build tools
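To double-check that the installer actually registered the MSVC toolset, you can query it with `vswhere.exe`, a small tool that ships with the Visual Studio Installer. This is a minimal sketch, assuming vswhere sits at its usual location under `%ProgramFiles(x86)%\Microsoft Visual Studio\Installer\`; `Microsoft.VisualStudio.Component.VC.Tools.x86.x64` is the component ID of the MSVC x64/x86 build tools. The function names are just for illustration, and on non-Windows machines the lookup simply returns `None`.

```python
import os
import subprocess
import sys
from pathlib import Path
from typing import Optional

# Component ID for the MSVC x64/x86 C++ build tools.
MSVC_COMPONENT = "Microsoft.VisualStudio.Component.VC.Tools.x86.x64"


def vswhere_path() -> Optional[Path]:
    """Return the path to vswhere.exe if it exists at its usual location."""
    if sys.platform != "win32":
        return None
    # Assumption: the default installer location under Program Files (x86).
    base = os.environ.get("ProgramFiles(x86)", r"C:\Program Files (x86)")
    candidate = Path(base) / "Microsoft Visual Studio" / "Installer" / "vswhere.exe"
    return candidate if candidate.is_file() else None


def find_msvc_install() -> Optional[str]:
    """Return the install path of the newest VS / Build Tools with MSVC, or None."""
    exe = vswhere_path()
    if exe is None:
        return None
    result = subprocess.run(
        [
            str(exe),
            "-latest",
            "-products", "*",  # also match standalone Build Tools, not just full VS
            "-requires", MSVC_COMPONENT,
            "-property", "installationPath",
        ],
        capture_output=True,
        text=True,
    )
    path = result.stdout.strip()
    return path or None


if __name__ == "__main__":
    install = find_msvc_install()
    if install:
        print("MSVC build tools found in:", install)
    else:
        print("MSVC build tools not found (or not running on Windows).")
```

If this prints nothing useful on Windows, reopen the Visual Studio Installer and check the components listed above.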

Additionally, some setups may require MinGW (Minimalist GNU for Windows) to provide the necessary compilers (gcc, g++):

Make sure to:

- Add the MinGW `bin/` folder to your system `PATH`.
- Verify the installation by running:

```
gcc --version
g++ --version
```
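The same check can be done from Python with `shutil.which`, which searches `PATH` the way your shell does. This is a minimal sketch; the tool list is my own choice of what's worth checking. Keep in mind that `cl` (the MSVC compiler) is normally only on `PATH` inside a "Developer Command Prompt", and CMake's Visual Studio generator locates MSVC by itself, so a missing `cl` here is not a problem on its own.

```python
import shutil
from typing import Dict, Iterable, Optional

# Tools worth checking before building llama-cpp-python from source.
# Assumption: gcc/g++ only matter if you build with MinGW instead of MSVC.
DEFAULT_TOOLS = ("cmake", "gcc", "g++", "cl")


def find_tools(names: Iterable[str] = DEFAULT_TOOLS) -> Dict[str, Optional[str]]:
    """Map each tool name to its full path on PATH, or None if it's missing."""
    return {name: shutil.which(name) for name in names}


if __name__ == "__main__":
    for name, path in find_tools().items():
        print(f"{name:6} -> {path or 'NOT FOUND on PATH'}")
```

Run it from the same terminal you use for `pip install`, since `PATH` can differ between terminals.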

Once those are installed, try running the install again:

```
pip install llama-cpp-python --extra-index-url https://abetlen.github.io/llama-cpp-python/whl/cpu --no-cache-dir
```

Note: Use `--no-cache-dir` and `--force-reinstall` if you want to force a fresh build.
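If you have several Python installations (common on Windows), it's safer to run pip through the interpreter you'll actually import from, i.e. `python -m pip`. The sketch below builds that command from `sys.executable` and optionally adds `--no-cache-dir` and `--force-reinstall`; the function name `pip_install_command` is hypothetical, and the extra index URL is the one from the command above.

```python
import sys
from typing import List

CPU_WHEEL_INDEX = "https://abetlen.github.io/llama-cpp-python/whl/cpu"


def pip_install_command(fresh_build: bool = True) -> List[str]:
    """Build a pip command that installs into the *current* interpreter."""
    cmd = [
        sys.executable, "-m", "pip", "install", "llama-cpp-python",
        "--extra-index-url", CPU_WHEEL_INDEX,
    ]
    if fresh_build:
        # Skip pip's wheel cache and reinstall even if the package is present.
        cmd += ["--no-cache-dir", "--force-reinstall"]
    return cmd


if __name__ == "__main__":
    # Print rather than run, so you can inspect the command first.
    print(pip_install_command())
```

To actually run it, pass the list to `subprocess.run(pip_install_command(), check=True)`.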

🧩 Problem 2: Runtime Error When Importing llama_cpp

After installation, I ran into this error on import:

```
Failed to load shared library '.../llama_cpp/lib/llama.dll':
Could not find module 'llama.dll' (or one of its dependencies). Try using the full path with constructor syntax.
```

This occurred even after using the prebuilt CPU-only wheel.

Root Cause

The root cause is tracked in this llama-cpp-python GitHub issue: https://github.com/abetlen/llama-cpp-python/issues/1993
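Before patching anything, it can help to confirm which shared libraries the package actually shipped. This sketch locates the installed `llama_cpp` package with `importlib.util.find_spec`, which finds a top-level package without running its `__init__.py` (so it works even when the import itself fails), and lists the files in its `lib` folder, the folder named in the error message. The helper name `llama_lib_files` is hypothetical.

```python
import importlib.util
from pathlib import Path
from typing import List, Optional

SHARED_LIB_SUFFIXES = {".dll", ".so", ".dylib"}


def llama_lib_files(package: str = "llama_cpp") -> Optional[List[Path]]:
    """List shared libraries in <package>/lib, or None if it isn't installed."""
    spec = importlib.util.find_spec(package)  # does not execute the package
    if spec is None or spec.origin is None:
        return None
    lib_dir = Path(spec.origin).parent / "lib"
    if not lib_dir.is_dir():
        return []
    return sorted(
        p for p in lib_dir.iterdir() if p.suffix.lower() in SHARED_LIB_SUFFIXES
    )


if __name__ == "__main__":
    files = llama_lib_files()
    if files is None:
        print("llama_cpp is not installed in this interpreter")
    else:
        for f in files:
            print(f)
```

If `llama.dll` is listed but still fails to load, the missing piece is most likely one of its dependencies rather than the file itself.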

Optional Fix: Comment Out RTLD_GLOBAL on Windows

If you’re still getting import errors after the above fixes, one workaround is to modify the source code of the installed package.

In the file `Lib/site-packages/llama_cpp/_ctypes_extensions.py`, comment out the following line:

```python
# cdll_args["winmode"] = ctypes.RTLD_GLOBAL
```

For me, this resolved the import error, which seems to be an edge case specific to Windows and certain Python builds.
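If you'd rather not edit files under site-packages (the change is lost whenever the package is reinstalled or upgraded), you can reproduce the difference with plain `ctypes`. `ctypes.CDLL` takes a `winmode` argument (Python 3.8+) that is passed to Windows' `LoadLibraryEx` as its flags. When it's omitted, Python 3.8+ resolves a DLL's dependencies only from the system paths, the DLL's own folder, and directories added with `os.add_dll_directory()`. As far as I can tell from CPython's source, `ctypes.RTLD_GLOBAL` is `0` on Windows, so with that line active the package loads with flags of `0` instead. The sketch below, built around a hypothetical `try_load` helper, loads a DLL under each mode in a separate interpreter and reports which one fails.

```python
import subprocess
import sys
from typing import Optional

# Child script: load the DLL with the requested winmode and print any error.
_CHILD = """
import ctypes, sys
path, mode = sys.argv[1], sys.argv[2]
winmode = None if mode == "default" else ctypes.RTLD_GLOBAL
try:
    ctypes.CDLL(path, winmode=winmode)  # winmode is ignored off Windows
except OSError as exc:
    print(exc)
    sys.exit(1)
"""


def try_load(path: str, mode: str) -> Optional[str]:
    """Load `path` in a fresh interpreter; return None on success, else the error."""
    # One process per attempt: a DLL that loaded once stays loaded, which
    # would make a second attempt in the same process succeed trivially.
    result = subprocess.run(
        [sys.executable, "-c", _CHILD, path, mode],
        capture_output=True,
        text=True,
    )
    if result.returncode == 0:
        return None
    return (result.stdout or result.stderr).strip()


if __name__ == "__main__":
    if len(sys.argv) < 2:
        print("usage: python try_load.py <path-to-llama.dll>")
    else:
        for mode in ("default", "rtld_global"):
            error = try_load(sys.argv[1], mode)
            print(f"{mode:12} -> {'OK' if error is None else error}")
```

Pass it the full path from your own error message. If only the `rtld_global` mode fails, that matches the behaviour the workaround above fixes.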

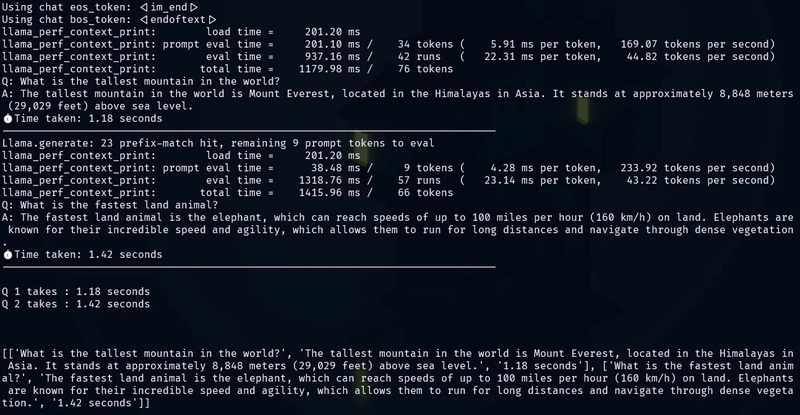

The code I use to run the model:

```python
import time

from llama_cpp import Llama

# Load the quantized GGUF model from the current directory.
llm = Llama(
    model_path="qwen2-0_5b-instruct-q4_k_m.gguf",
    n_ctx=1024,   # context window; depends on your model and hardware
    n_threads=2,  # adjust to your CPU threads
)

questions = [
    "What is the tallest mountain in the world?",
    "What is the fastest land animal?",
]

qa = []  # rows of [question, answer, formatted duration]

for question in questions:
    start_time = time.perf_counter()  # monotonic, high-resolution timer
    res = llm.create_chat_completion(
        messages=[
            {"role": "system", "content": "You are an assistant who perfectly describes in a professional way."},
            {"role": "user", "content": question},
        ],
        max_tokens=100,
    )
    duration = time.perf_counter() - start_time

    answer = res["choices"][0]["message"]["content"]
    print("Q:", question)
    print("A:", answer)
    print(f"⏱ Time taken: {duration:.2f} seconds")
    print("-" * 80)

    qa.append([question, answer, f"{duration:.2f} seconds"])

print()

# Per-question timing summary.
for i, (_, _, took) in enumerate(qa, start=1):
    print(f"Q{i} took: {took}")
```
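If you use this script to compare models or `n_threads` settings, it's easier to keep the raw durations as floats and summarize them at the end instead of storing formatted strings. A minimal standard-library sketch; `summarize_durations` is a hypothetical helper, not part of llama-cpp-python.

```python
import statistics
from typing import Dict, List


def summarize_durations(durations: List[float]) -> Dict[str, float]:
    """Return count, mean, min and max of per-question latencies in seconds."""
    if not durations:
        raise ValueError("no durations to summarize")
    return {
        "count": len(durations),
        "mean": statistics.mean(durations),
        "min": min(durations),
        "max": max(durations),
    }
```

For example, collect `duration` into a list inside the loop above and print `summarize_durations(that_list)` after it finishes.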