You got your hands on some data that was leaked from a social network, and you want to help the poor people affected.

Luckily, you know of a government service that automatically blocks a list of credit cards.

The service is a little old-school, though: you have to upload a CSV file in exactly the right format. The upload fails if the CSV file contains invalid data.

The CSV file should have two columns, Name and Credit Card. It must also be named according to the following pattern:

YYYYMMDD.csv

The leaked data doesn't have credit card details for every user and you need to pick only the affected users.

The data was published here:

You don't have much time to act.

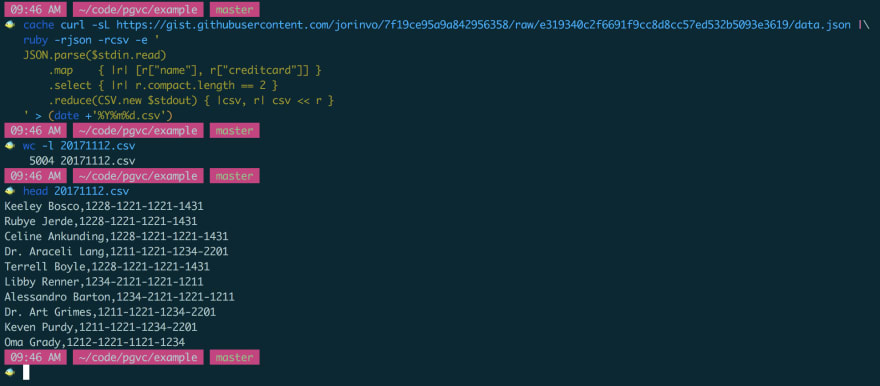

What tools would you use to get the data, format it correctly and save it in the CSV file?

Do you have a crazy vim configuration that allows you to do all of this inside your editor? Are you a shell power user and write this as a one-liner? How would you solve this in your favorite programming language?
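To get the ball rolling, here is a minimal Python sketch of the filtering and formatting steps. It assumes the leaked data has already been downloaded and parsed (for example with `json.loads`) into a list of dictionaries, and that each record uses the keys `name` and `creditcard`. Those key names and the helper names `affected_users` and `write_block_list` are assumptions made for illustration, so adjust them to whatever the real data looks like.

```python
import csv
import os
from datetime import date


def affected_users(records):
    """Keep only records with a non-empty credit card value.

    The key name "creditcard" is an assumption about the leaked data.
    """
    return [r for r in records if r.get("creditcard")]


def write_block_list(records, day=None, directory="."):
    """Write the affected users to <directory>/YYYYMMDD.csv and return the path."""
    day = day or date.today()
    path = os.path.join(directory, day.strftime("%Y%m%d") + ".csv")
    # newline="" lets the csv module control line endings itself.
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(["Name", "Credit Card"])
        for r in affected_users(records):
            writer.writerow([r.get("name", ""), r["creditcard"]])
    return path
```

Calling `write_block_list(records)` with the parsed data writes a file named after today's date into the current directory. The `csv` module takes care of quoting, so names that contain commas or quotes should not break the upload.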

Show your solution in the comments below!

PowerShell to the rescue!

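$json = invoke-webrequest 'gist.githubusercontent.com/jorinvo...' | convertfrom-json

# Keep only users that have a credit card, and name the columns the way the service expects
$json | where-object { $_.creditcard } | select @{n='Name';e={$_.name}}, @{n='Credit Card';e={$_.creditcard}} | export-csv "$(get-date -format yyyyMMdd).csv" -NoTypeInformation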