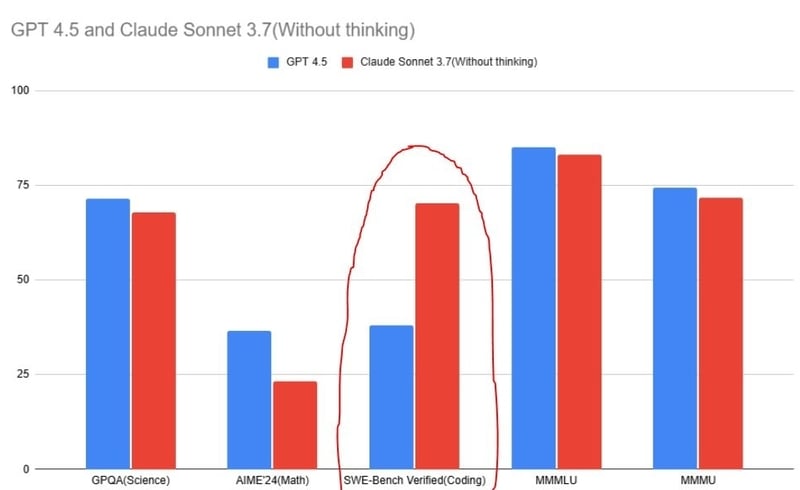

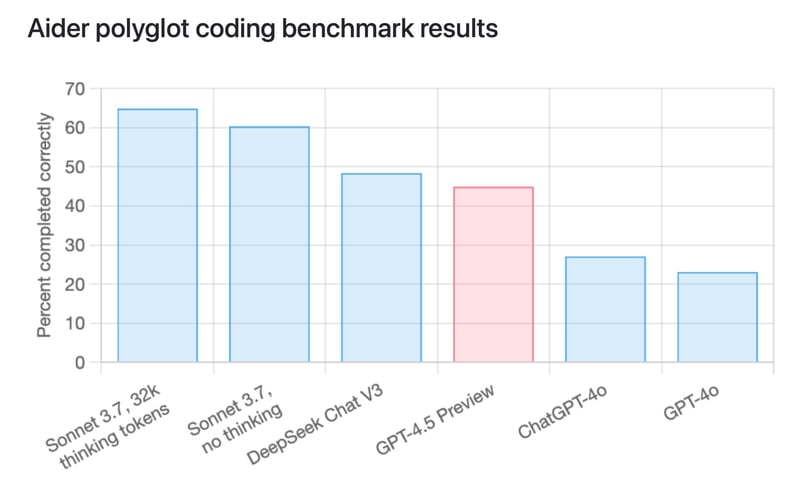

It is said that Claude 3.7 completely crushes OpenAI's newest and costliest model, GPT-4.5. But hey, I don't trust these benchmarks until I test them myself.

So, I ran my own tests on three Web Development coding questions.

Let's see how these two models compare against each other in coding. 🤨

TL;DR

If you want to skip straight to the result: Claude 3.7 Sonnet dominates GPT-4.5 in coding. GPT-4.5 is not even close (kinda sucks!), despite being about 10x costlier than Claude 3.7 Sonnet.

And yeah, that’s fair. Claude 3.7 Sonnet is built for coding, while GPT-4.5 is mainly for writing and designing.

I recently dropped a coding comparison post on Claude 3.7 vs. Grok 3 vs. OpenAI o3-mini-high. If you're interested in how Claude 3.7 performed there, check it out. 👇

Claude 3.7 Sonnet vs. Grok 3 vs. o3-mini-high: Coding comparison

Shrijal Acharya for Composio ・ Feb 27

Brief on the GPT-4.5 Model

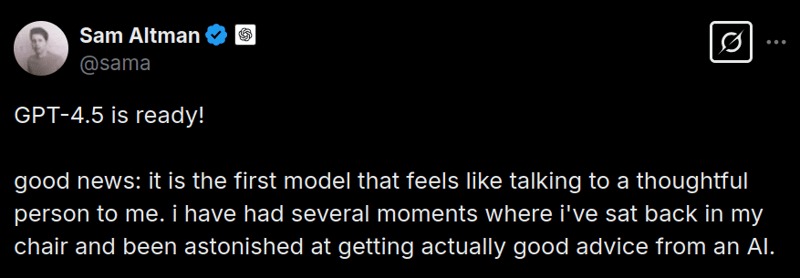

OpenAI on Thursday released an early version of GPT-4.5, a new version of its flagship large language model. The team claims it is the "biggest and their best model," and that chatting with it feels like talking to an actual human.

And NO, this is not a reasoning model, as stated by OpenAI CEO Sam Altman himself.

This shows in the coding benchmarks: compared to other models like Claude 3.7 Sonnet and the earlier GPT-4o models, its percentage accuracy appears to be significantly lower.

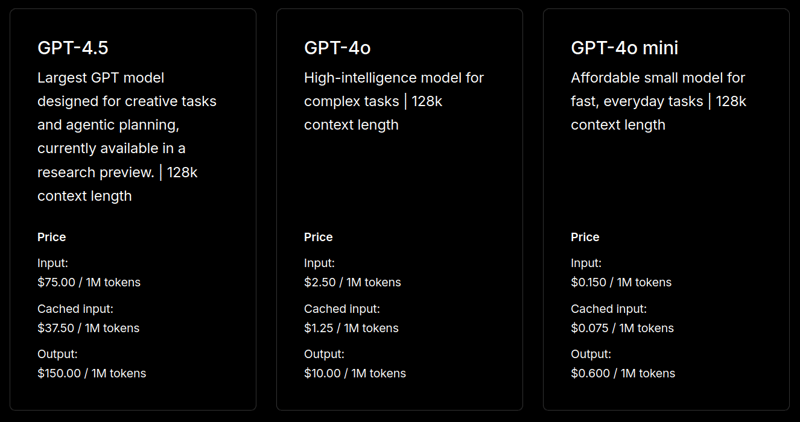

When it comes to pricing, this is OpenAI's most expensive AI model, at $75 per million input tokens and $150 per million output tokens. 😮‍💨 You can compare the pricing of this model to some of their earlier models side by side:

Currently, people with a $200-a-month ChatGPT Pro account can try out GPT-4.5. OpenAI says it will begin rolling out to Plus users next week.

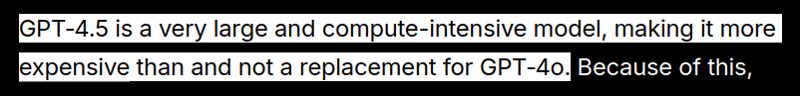
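To get a feel for what those rates mean per request, here's a quick back-of-the-envelope sketch. The $75 and $150 figures are the ones quoted above; the `estimateCostUSD` helper and the token counts in the example are made up purely for illustration.

```typescript
// Rates quoted above for GPT-4.5, in USD per one million tokens.
const GPT45_INPUT_PER_MILLION = 75;
const GPT45_OUTPUT_PER_MILLION = 150;

// Hypothetical helper (not part of any SDK): estimates the cost of one request.
function estimateCostUSD(inputTokens: number, outputTokens: number): number {
  const inputCost = (inputTokens / 1000000) * GPT45_INPUT_PER_MILLION;
  const outputCost = (outputTokens / 1000000) * GPT45_OUTPUT_PER_MILLION;
  return inputCost + outputCost;
}

// Example with made-up token counts: a 2,000-token prompt and a 4,000-token reply.
console.log(estimateCostUSD(2000, 4000).toFixed(2)); // prints "0.75"
```

A single coding prompt with a long reply can land in that range, so a few rounds of back-and-forth add up quickly.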

OpenAI did not disclose the size of their new model, but they mentioned that the scale increase from GPT-4o to GPT-4.5 is similar to the jump from GPT-3.5 to GPT-4o.

What makes it super expensive?

Unlike reasoning models like o1 and o3-mini, which work through the answer step by step, normal large language models like GPT-4.5 spit out the first response they come up with.

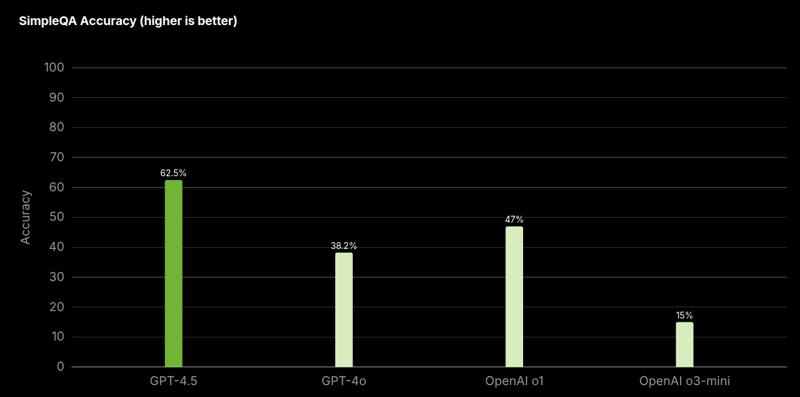

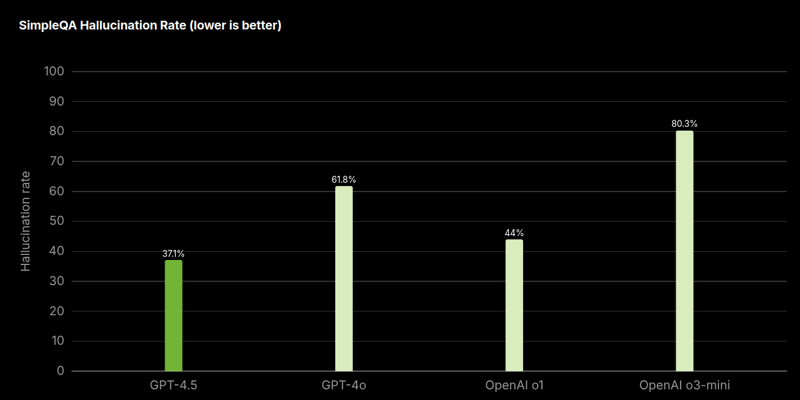

On SimpleQA, a general-knowledge quiz developed by OpenAI last year that covers a wide range of topics, GPT-4o scored 38.2%, o3-mini scored 15%, and GPT-4.5 scored a whopping 62.5%. 🤯

OpenAI claims that GPT-4.5 comes up with far fewer made-up answers, which is also referred to as hallucination in AI terms.

Along with that, it has enhanced contextual knowledge and writing skills, which is the main reason why the model's output sounds more natural with less unnecessary reasoning.

In the same test, the GPT-4.5 model came up with made-up answers 37.1% of the time, compared with 61.8% for GPT-4o and 80.3% for o3-mini.

Coding Comparison

💁 As I said earlier, we will mainly be comparing the two models on frontend questions.

1. Masonry Grid Image Gallery

Prompt: Build a Next.js image gallery with a masonry grid, infinite scrolling, and a search bar for filtering images by keywords. Style it like Unsplash with a clean, modern UI. Optimize image loading. Place all code in page.tsx.

Response from Claude 3.7 Sonnet

You can find the code it generated here: Link

Here's the output of the program:

The output from Claude is pure insanity. Everything is just so perfectly implemented.

I could only notice one small issue, and that is that the footer does not stick to the bottom.

Response from GPT-4.5

You can find the code it generated here: Link

Here's the output of the program:

The output from GPT-4.5 is not what I expected. I mean, it's kind of smart that it didn't use any npm modules like @tanstack/react-query, but clearly, the Masonry Grid layout is missing, and the way infinite scrolling is implemented feels a bit more DIY.

Can't complain much, but it is nowhere near the code Claude 3.7 generated.

Final Verdict: No doubt, the Claude 3.7 Sonnet output is far superior. ✅ It has implemented everything correctly, from the Masonry Grid layout to perfect infinite scrolling using the @tanstack/react-query library. There is still a lot missing in the GPT-4.5 output.
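For context on what the "masonry" part of the prompt involves, here's a minimal sketch of one common approach: drop each image into whichever column is currently the shortest. To be clear, this is not the code either model generated, and the `GalleryImage` type and `distributeIntoColumns` function are names I made up for the illustration.

```typescript
// Hypothetical image shape: only the dimensions matter for the layout.
interface GalleryImage {
  id: string;
  width: number;
  height: number;
}

// Greedy masonry layout: each image goes into the currently shortest column.
function distributeIntoColumns(
  images: GalleryImage[],
  columnCount: number
): GalleryImage[][] {
  const columns: GalleryImage[][] = Array.from({ length: columnCount }, () => []);
  const heights: number[] = new Array(columnCount).fill(0);

  for (const image of images) {
    // Find the shortest column so far (ties go to the leftmost one).
    let shortest = 0;
    for (let i = 1; i < columnCount; i++) {
      if (heights[i] < heights[shortest]) shortest = i;
    }
    columns[shortest].push(image);
    // Columns share one width, so rendered height scales with the aspect ratio.
    heights[shortest] += image.height / image.width;
  }
  return columns;
}
```

Each inner array can then be rendered as its own vertical stack, which is what gives the staggered look that was missing from the GPT-4.5 output.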

2. Typing Speed Test

Let's test both models by asking them to build a Typing Speed Test app similar to Monkeytype. Not to mention, I get to flex my typing speed. 😉 (just kidding)

Prompt: Build a Next.js basic typing test app. Users type a given sentence, with mistakes highlighted in red, allowing corrections. Display real-time typing speed, both raw (with mistakes) and adjusted (without mistakes). Once the user types to the end, the test should be over. Place all code in page.tsx.

Response from Claude 3.7 Sonnet

You can find the code it generated here: Link

Here's the output of the program:

WOAH, it just feels illegal to use this model for coding. How good is this? I have no words to say. 🤯

In no time, and with everything implemented correctly, it built this entire typing test site with more than I asked for. It even added an accuracy display.

Response from GPT-4.5

You can find the code it generated here: Link

Here's the output of the program:

GPT-4.5 got this one correct as well, but there's one small issue with the code it generated. Once the user reaches the end, the test is supposed to end, but it doesn't unless the user goes back and fixes their mistakes.

Final Verdict: There's one minor issue with the code GPT-4.5 generated, but it's fair to say both models got it correct. ✅
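The raw and adjusted speeds from the prompt, and the end-of-test condition that GPT-4.5 tripped on, boil down to a few lines of arithmetic. Here's a minimal sketch, assuming the common convention that one "word" is five characters. Neither model's code is reproduced here, and `computeTypingStats` is a hypothetical name.

```typescript
interface TypingStats {
  rawWpm: number;      // counts every typed character, mistakes included
  adjustedWpm: number; // counts only characters that match the target
  accuracy: number;    // percentage of typed characters that are correct
  finished: boolean;   // true once the user has typed to the end
}

const CHARS_PER_WORD = 5; // assumption: the usual typing-test convention

function computeTypingStats(
  target: string,
  typed: string,
  elapsedMs: number
): TypingStats {
  // Count the typed characters that match the target at the same position.
  let correct = 0;
  for (let i = 0; i < typed.length && i < target.length; i++) {
    if (typed[i] === target[i]) correct++;
  }

  const minutes = elapsedMs / 60000;
  const toWpm = (chars: number): number =>
    minutes > 0 ? chars / CHARS_PER_WORD / minutes : 0;

  return {
    rawWpm: toWpm(typed.length),
    adjustedWpm: toWpm(correct),
    accuracy: typed.length > 0 ? (correct / typed.length) * 100 : 100,
    // End the test on length alone, even if some mistakes are left unfixed.
    finished: typed.length >= target.length,
  };
}
```

Checking `finished` against length rather than an exact match is what lets the test end even when the last few characters are wrong.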

3. Collaborative Real-time Whiteboard

💁 This one's pretty tough, and I am not sure if even Claude 3.7 will get this correct. It requires setting up a separate WebSocket server and listening for connections.

Prompt: Build a real-time collaborative whiteboard in Next.js with Tailwind for styling. Multiple users should be able to draw and see updates instantly. But, when a user clears their canvas, the other user's canvas should not be cleared.

Response from Claude 3.7 Sonnet

You can find the code it generated here: Link

Here's the output of the program:

Okay, so now I see some junior developers getting replaced by AI pretty soon. 🤐

For me, it would take a pretty long time to code this up. I am starting to see why this model is called a beast when it comes to coding. Just perfection!

Response from GPT-4.5

You can find the code it generated here: Link

Here's the output of the program:

GPT-4.5 failed badly here. The websocket connection was established, but there was an issue parsing the data received from the websocket connection on the client.

Final Verdict: Claude 3.7 Sonnet just crushed this one as well. 🔥 The code it generated is perfect, and the output is exactly how I wanted. GPT-4.5 was able to establish the websocket connection but had an issue parsing the data. Even after I tried to iterate on its mistake, it couldn't really fix it.
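Both sticking points in this task (parsing the incoming WebSocket data on the client, and keeping a "clear" from wiping everyone's canvas) are easier to reason about with a concrete shape in front of you. Here's a minimal sketch under my own assumptions: the JSON message format and the `parseDrawMessage` and `LocalBoard` names are all made up for illustration and aren't taken from either model's output.

```typescript
// Hypothetical wire format for this sketch: one JSON object per message.
interface Point {
  x: number;
  y: number;
}

interface DrawMessage {
  type: "draw";
  userId: string;
  from: Point;
  to: Point;
}

function isPoint(value: unknown): value is Point {
  if (typeof value !== "object" || value === null) return false;
  const { x, y } = value as Record<string, unknown>;
  return typeof x === "number" && typeof y === "number";
}

// Safely parse raw socket data; returns null instead of throwing on bad input.
function parseDrawMessage(raw: string): DrawMessage | null {
  let data: unknown;
  try {
    data = JSON.parse(raw);
  } catch {
    return null; // not valid JSON
  }
  if (typeof data !== "object" || data === null) return null;
  const { type, userId, from, to } = data as Record<string, unknown>;
  if (type !== "draw" || typeof userId !== "string") return null;
  if (!isPoint(from) || !isPoint(to)) return null;
  return { type: "draw", userId, from, to };
}

// Each client keeps its own list of line segments to render.
class LocalBoard {
  private segments: DrawMessage[] = [];

  // Called for local strokes and for every valid message from the server.
  add(message: DrawMessage): void {
    this.segments.push(message);
  }

  // Clearing is local only: nothing is sent over the socket,
  // so other users' canvases stay untouched.
  clear(): void {
    this.segments = [];
  }

  get size(): number {
    return this.segments.length;
  }
}
```

In the browser, you'd call `parseDrawMessage(event.data)` inside the socket's `onmessage` handler (assuming the server sends text frames) and simply ignore anything that comes back as `null`.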

Summary

The results should be pretty clear by now. 😮‍💨 Claude 3.7 won by a huge margin, and hey, I'll say it again: this comparison is not fair to GPT-4.5, as it is not trained to be good at coding. But at least it got the first two problems working, even though the results were not perfect.

When to use the GPT-4.5 model?

Now that we have a general understanding of this model's abilities, let's take a look at situations where you'd want to prefer this model over anything else. 🤔

All in all, GPT-4.5 is not a model you can rely on for reasoning tasks. What it does have is a better understanding of what humans mean, and it can interpret subtle cues. It's designed to be better at conversations, design, and writing, adding that bit of human touch.

When your use case is specifically about writing or design, this model is the ideal choice.

So, does it justify the pricing? If I had to say, definitely not. But it's up to you to decide whether you think it's worth your money. 🤷‍♂️

For anything else, it doesn't quite justify the pricing and may not be the best choice.

Conclusion

The result's pretty clear, and needless to say, this is not a fair comparison. It's like we compared an experienced developer with someone who's not even a coder. 🥴

But hey, the comparison is done to see how comparable GPT-4.5 is to Claude 3.7 Sonnet when it comes to coding.

Not just in this comparison, but in all the comparisons I've done, Claude 3.7 Sonnet is simply better, even without extended thinking turned on. For now, it's the only model you need when it comes to coding. 🔥

What do you think of this comparison? If you want me to compare some other models against each other, do let me know in the comments! 👇
