Last weekend we had a chance to fine-tune the performance of a website that we started over a year ago.

It is a job board for software developers who are looking for work opportunities in Switzerland. The performance of SwissDevJobs.ch matters for 2 reasons:

- Good user experience: this covers both the time to load (becoming interactive) and the feeling of snappiness while using the website.

- SEO: our traffic relies heavily on Google Search and, as you probably know, Google favors websites with good performance (they even introduced the speed report in Search Console).

If you search for "website performance basics" you will get many actionable points, like:

- Use a CDN (Content Delivery Network) for static assets with a reasonable cache time

- Optimize image size and format

- Use GZIP or Brotli compression

- Reduce the size of non-critical JS and CSS code

We implemented most of this low-hanging fruit.

Additionally, as our main page is basically a filterable list (written in React), we introduced react-window to render only 10 list items at a time instead of 250.
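To illustrate the idea behind windowing, here is a minimal sketch in plain JavaScript. It is not react-window's API: `visibleRange` and its parameters are hypothetical names, and the sketch assumes fixed-height rows. The library takes care of this bookkeeping (and more) internally.

```javascript
// Hypothetical helper illustrating list windowing with fixed-height rows.
// Returns the indices of the first and last rows that intersect the viewport.
function visibleRange(scrollTop, viewportHeight, itemHeight, itemCount) {
  const first = Math.max(0, Math.floor(scrollTop / itemHeight));
  const last = Math.min(
    itemCount - 1,
    Math.floor((scrollTop + viewportHeight - 1) / itemHeight)
  );
  return { first, last };
}

// Assumed numbers: 250 rows of 100px each in a 1000px-tall viewport.
const { first, last } = visibleRange(0, 1000, 100, 250);
console.log(`render rows ${first}..${last} of 250`); // prints "render rows 0..9 of 250"
```

Only the rows inside that range need real DOM nodes; the rest of the list is represented by empty space of the right height.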

All of this improved the performance considerably, but looking at the speed reports, it felt like we could do better.

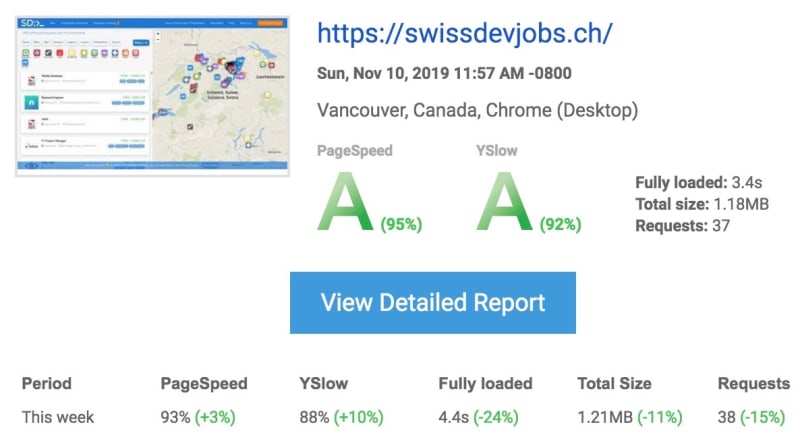

So we started digging into the more unusual ways in which we could make it faster and... we have been quite successful! Here is the report from this week:

This report shows that the time of full load decreased by 24%!

What did we do to achieve it?

- Use rel="preload" for the JSON data

This simple line in the index.html file tells the browser to fetch the JSON data before it is actually requested by an AJAX/fetch call from JavaScript.

When the data is actually needed, it is read from the browser cache instead of being fetched again. This helped us shave ~0.5 s off the loading time.

We wanted to implement this one earlier, but there used to be some problems in the Chrome browser that caused a double download. Now it seems to work.
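The line itself is not reproduced above, so here is a minimal sketch of what such a tag typically looks like, built as a string so that it can be checked. The helper names `buildPreloadTag` and `loadJobs` are hypothetical, and the `/api/jobs` URL is the endpoint mentioned later in this post. The `as="fetch"` and `crossorigin="anonymous"` attributes are assumptions for a same-origin `fetch()` call with default options: the preload has to match the CORS and credentials mode of the later request, otherwise the browser may download the resource twice.

```javascript
// Hypothetical helper: returns the tag that would be placed in the <head> of index.html.
// Only pass trusted, static URLs, since the value is not escaped.
function buildPreloadTag(url) {
  return `<link rel="preload" href="${url}" as="fetch" crossorigin="anonymous">`;
}

// Later, in application code: request the very same URL with default fetch options.
// If the preload has already finished, the browser can reuse its response.
function loadJobs(fetchImpl = (url) => fetch(url)) {
  return fetchImpl("/api/jobs").then((response) => response.json());
}

console.log(buildPreloadTag("/api/jobs"));
```

The important part is that the preloaded URL and the URL requested from JavaScript are identical, including any query string.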

-

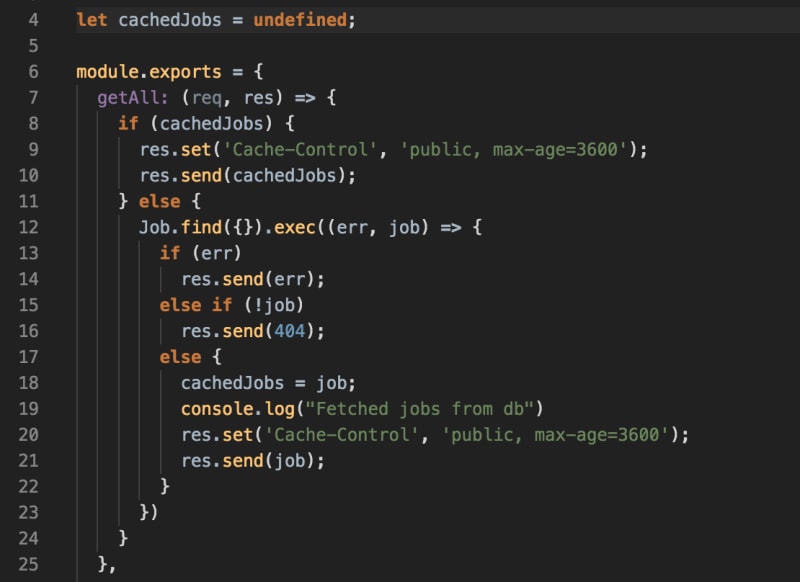

Implement super simple cache on the server side

After implementing JSON preloading, we found that downloading the job list was still the bottleneck (it took around 0.8 s to get the response from the server). Therefore, we decided to look into a server-side cache. First, we tried node-cache but, surprisingly, it did not improve the fetch time.

It is worth mentioning that the /api/jobs endpoint is a simple getAll endpoint, so there is little room for improvement.

However, we decided to go deeper and built our own simple cache with... a single JS variable. It looks like this:

The only thing not visible here is the POST /jobs endpoint, which deletes the cache (cachedJobs = undefined).

It is as simple as that! Another 0.4 s of load time off!
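The snippet is not reproduced above, so the following is only a minimal sketch of the single-variable idea, written without any web framework. The `cachedJobs` variable comes from the text; `loadJobsFromDb`, `getAllJobs`, and `invalidateJobsCache` are hypothetical names, and the sample data is made up for illustration.

```javascript
// Module-level variable that acts as the entire cache.
let cachedJobs;

// Hypothetical stand-in for the real database query behind /api/jobs.
async function loadJobsFromDb() {
  return [{ id: 1, title: "Frontend Developer" }];
}

// Returns the cached list if present; otherwise loads it once and remembers it.
// The loader is a parameter only so the sketch can be exercised without a database.
async function getAllJobs(loadJobs = loadJobsFromDb) {
  if (cachedJobs === undefined) {
    cachedJobs = await loadJobs();
  }
  return cachedJobs;
}

// Called whenever the job list changes, for example after a new job is posted.
function invalidateJobsCache() {
  cachedJobs = undefined;
}
```

In a real server, the GET /api/jobs handler would respond with the result of `getAllJobs()`, and the POST /jobs handler would call `invalidateJobsCache()` after saving. Because the cache lives in process memory, this works as-is only with a single server process.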

- The last thing we looked at was the size of the CSS and JS bundles that we load. We noticed that the "font-awesome" bundle weighs over 70 kB.

At the same time, we used only about 20% of the icons.

How did we approach it? We used icomoon.io to select the icons we used and created our own self-hosted lean icon package.

50 kB saved!

Those 3 unusual changes helped us speed up the website's loading time by 24%. Or, as some other reports show, by 43% (to 1.2 s).

We are quite happy with these changes. However, we believe that we can do better than that!

If you have your own unusual techniques that could help, we would be grateful if you shared them in the comments!
