Regression testing is critical for ensuring that new code changes do not break existing functionality. However, not every QA team manages regression testing effectively.

In this article, we list the top 10 regression testing best practices to help you achieve better efficiency:

1. Integrate Regression Tests into CI/CD Early

Run regression tests automatically at the pull request or pre-merge stage. This ensures that regressions are caught before they reach staging or production. The earlier defects are found, the lower the cost.

Use CI tools like GitHub Actions, GitLab CI, Jenkins, or Azure DevOps to integrate test execution into your pipeline. Fail builds on critical test failures and generate clear, actionable feedback for developers.
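As a rough sketch of the "fail builds on critical test failures" step, the Python script below could run as a pipeline step after the test job. It assumes the test runner writes a JUnit-style XML report (a widely supported format) and that critical tests are marked with a hypothetical `critical_` name prefix. Adapt both assumptions to your own conventions.

```python
import sys
import xml.etree.ElementTree as ET


def critical_failures(root: ET.Element, critical_prefix: str = "critical_") -> list[str]:
    """Return 'classname::name' for every critical test case that failed or errored.

    Assumes critical tests are identified by a name prefix (a hypothetical convention).
    """
    failures = []
    # iter() finds <testcase> elements whether the root is <testsuite> or <testsuites>
    for case in root.iter("testcase"):
        name = case.get("name", "")
        broke = case.find("failure") is not None or case.find("error") is not None
        if name.startswith(critical_prefix) and broke:
            failures.append(f"{case.get('classname', '')}::{name}")
    return failures


def main(report_path: str) -> int:
    failed = critical_failures(ET.parse(report_path).getroot())
    for test_id in failed:
        # One line per failure keeps the CI log actionable for developers
        print(f"CRITICAL REGRESSION: {test_id}", file=sys.stderr)
    # A non-zero exit code makes the CI step, and therefore the build, fail
    return 1 if failed else 0


# Usage in a pipeline step: python check_critical.py path/to/junit-report.xml
if __name__ == "__main__" and len(sys.argv) == 2:
    sys.exit(main(sys.argv[1]))
```

Non-critical failures are still visible in the normal test report; this gate only decides whether the build is blocked.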

2. Isolate the System Under Test (SUT)

Testing in a shared or unstable environment leads to inconsistent results and hard-to-reproduce bugs. Isolating the system under test and mocking dependencies helps you reduce test flakiness and increase confidence that failures are related to your code, not external factors.

Here are some tips:

Use Docker containers or, for ASP.NET Core apps, WebApplicationFactory to spin up isolated test environments

Mock third-party APIs, external services, databases, and authentication layers

Ensure the environment mirrors production configs (e.g., headers, timeouts)

Run tests in parallel to simulate concurrent usage, keeping each test's data separate so tests cannot affect one another
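The mocking tip can be illustrated with Python's standard `unittest.mock`. `CheckoutService` and its `payment_client.charge()` call are hypothetical stand-ins for your own code and a third-party payment API. The point is that the test controls the external response, so a failure points at your logic rather than at the network or a vendor sandbox.

```python
from unittest.mock import Mock


class CheckoutService:
    """Hypothetical system under test that depends on an external payment gateway."""

    def __init__(self, payment_client) -> None:
        self.payment_client = payment_client

    def place_order(self, amount_cents: int) -> str:
        response = self.payment_client.charge(amount_cents)
        return "confirmed" if response["status"] == "ok" else "payment_failed"


def test_place_order_confirms_when_gateway_approves() -> None:
    gateway = Mock()  # stands in for the real third-party client
    gateway.charge.return_value = {"status": "ok"}
    service = CheckoutService(gateway)
    assert service.place_order(2599) == "confirmed"
    # Also verify the SUT called the dependency exactly as expected
    gateway.charge.assert_called_once_with(2599)


def test_place_order_reports_declined_payment() -> None:
    gateway = Mock()
    gateway.charge.return_value = {"status": "declined"}
    assert CheckoutService(gateway).place_order(2599) == "payment_failed"
```

Because no real network call is made, these tests are also safe to run in parallel.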

3. Focus Your Regression Testing Based on Risk

Testing every feature is time-consuming and not always necessary. Instead, focus on the areas most likely to break or have the biggest impact if they fail. This includes features with complex logic, areas that change often, or parts that are critical to users.

Identify high-risk modules using past bugs and system complexity

Review recent commits to see what changed and where

Involve devs and product owners to help define risk areas

Allocate more regression coverage to risky zones
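One simple way to turn these signals into numbers is a likelihood × impact score, a common risk-assessment heuristic rather than a standard formula. In the sketch below, likelihood is approximated by past bug count plus recent commit count, impact is a 1 to 5 rating agreed with developers and product owners, and the regression budget is split in proportion to each module's score. The module names, fields, and figures are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Module:
    name: str
    past_bugs: int       # defects traced to this module (e.g., over the last 6 months)
    recent_commits: int  # commits touching it since the last release
    impact: int          # 1 (cosmetic) to 5 (business-critical), agreed with devs/POs


def risk_score(m: Module) -> int:
    likelihood = m.past_bugs + m.recent_commits
    return likelihood * m.impact


def allocate_tests(modules: list[Module], budget: int) -> dict[str, int]:
    """Split a regression-test budget in proportion to risk (floored, so the sum may be < budget)."""
    total = sum(risk_score(m) for m in modules)
    if total == 0:
        # No risk signal at all: fall back to an even split
        return {m.name: budget // len(modules) for m in modules}
    ranked = sorted(modules, key=risk_score, reverse=True)
    return {m.name: budget * risk_score(m) // total for m in ranked}


modules = [
    Module("search", past_bugs=1, recent_commits=2, impact=3),
    Module("checkout", past_bugs=4, recent_commits=6, impact=5),
    Module("profile", past_bugs=0, recent_commits=1, impact=1),
]
print(allocate_tests(modules, budget=120))  # {'checkout': 100, 'search': 18, 'profile': 2}
```

The weights are deliberately crude; the value is in making the team's risk assumptions explicit and easy to revisit.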

4. Rotate Your Test Coverage

Running every test in every release wastes time. Instead, keep a full library of test cases and rotate through them across releases. Run the most important tests every time and cycle through the others regularly to maintain broad coverage.

Tag test cases by priority and frequency (e.g., run always, run every 2 releases)

Create test plans based on tags and rotate them over sprints

Review rotation strategy during sprint planning or retrospectives

For example, in Katalon TestOps, you can assign tags to test cases for better management, which can later be filtered to fit your test execution needs.

5. Prioritize Tests Around New Features

New features are where things often break. Focus regression testing around what was just built or updated and anything connected to it. This helps you catch issues early and reduce test bloat.

Review feature requirements and implementation areas

Map which existing components are affected by new changes

Update regression plans to include only relevant test cases
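Mapping affected components is essentially a graph walk: start from what changed, follow "is depended on by" edges to everything downstream, then keep only the tests that touch an affected component. The dependency map and test-to-component map below are hypothetical; in practice they might come from build metadata or coverage data.

```python
from collections import deque


def affected_components(changed: set[str], dependents: dict[str, list[str]]) -> set[str]:
    """Breadth-first walk from the changed components to everything that depends on them."""
    affected = set(changed)
    queue = deque(changed)
    while queue:
        component = queue.popleft()
        for dependent in dependents.get(component, []):
            if dependent not in affected:
                affected.add(dependent)
                queue.append(dependent)
    return affected


def select_tests(test_map: dict[str, set[str]], affected: set[str]) -> list[str]:
    """Keep only tests that exercise at least one affected component."""
    return sorted(name for name, components in test_map.items() if components & affected)


# component -> components that depend on it
dependents = {"pricing": ["cart"], "cart": ["checkout"], "auth": ["profile"]}
test_map = {
    "test_cart_totals": {"cart"},
    "test_checkout_flow": {"checkout", "payment"},
    "test_profile_edit": {"profile"},
}

affected = affected_components({"pricing"}, dependents)
print(select_tests(test_map, affected))  # ['test_cart_totals', 'test_checkout_flow']
```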

6. Use Metrics to Decide When to Stop

Testing endlessly without knowing when to stop is inefficient. Instead, use bug discovery rates and test pass trends to decide when you're done. If you’re finding fewer bugs and everything’s stable, it may be time to wrap up.

Track bug rates per test cycle

Set thresholds for bug counts or failure rates to guide test exit

Monitor time-to-detect metrics and test result trends
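Exit thresholds can be written down as an explicit check so the stop decision is repeatable. In this sketch the thresholds (at most 2 new bugs and at least a 95% pass rate, sustained over the last 2 cycles) are placeholders, not recommended values; agree on your own with the team.

```python
from dataclasses import dataclass


@dataclass
class Cycle:
    executed: int
    passed: int
    new_bugs: int

    @property
    def pass_rate(self) -> float:
        return self.passed / self.executed if self.executed else 0.0


def ready_to_stop(history: list[Cycle], max_new_bugs: int = 2,
                  min_pass_rate: float = 0.95, window: int = 2) -> bool:
    """True when each of the last `window` cycles meets both thresholds."""
    recent = history[-window:]
    if len(recent) < window:
        return False  # not enough data to show a stable trend
    return all(c.new_bugs <= max_new_bugs and c.pass_rate >= min_pass_rate for c in recent)


history = [Cycle(200, 170, 9), Cycle(200, 188, 4), Cycle(200, 193, 1), Cycle(200, 196, 0)]
print(ready_to_stop(history[:3]))  # False: the cycle with 4 new bugs is still in the window
print(ready_to_stop(history))      # True
```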

To share these metrics with stakeholders, summarize each test cycle in a report. A test report should include the following sections:

- *Project information:* Briefly describe the testing project and its objectives, and explain the purpose of the report if needed.

- *Test summary:* This section is essentially an abstract that gives a quick overview of the key findings. The most commonly reported metrics here are the number of test cases executed, the number that passed or failed, and any notable bugs found.

- *Test result:* This section expands on the summary and goes into greater detail about each bug: the test case ID, what the test case covers, and the bug that was found. If needed, include screenshots or a detailed description of the sequence of events that triggers the bug.

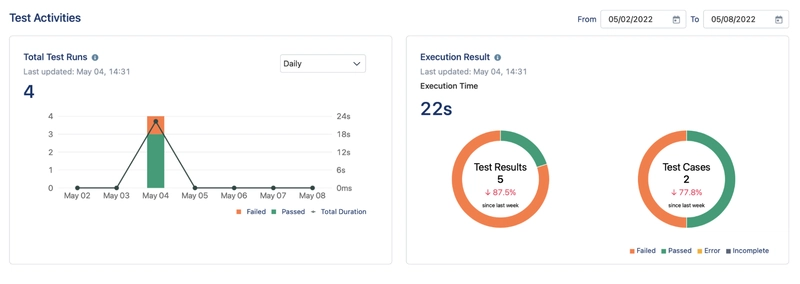

This section typically includes charts and diagrams to communicate results more clearly to nontechnical stakeholders. The pie charts above show the percentage of passed and failed test cases in a test run in Katalon TestOps.
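The test summary figures, including the passed/failed percentages that such charts visualize, come from simple counts over a run's results. The sketch below assumes each result is a (test case ID, status) pair with hypothetical status strings; the same numbers would feed whatever charting or reporting tool you use.

```python
from collections import Counter


def summarize(results: list[tuple[str, str]]) -> dict[str, object]:
    """Build the 'Test summary' numbers from (test_case_id, status) pairs of executed cases."""
    counts = Counter(status for _, status in results)
    executed = len(results)
    return {
        "executed": executed,
        "passed": counts["passed"],
        "failed": counts["failed"],
        "pass_pct": round(100 * counts["passed"] / executed, 1) if executed else 0.0,
        # IDs of failed cases feed the detailed 'Test result' section
        "failed_ids": [case_id for case_id, status in results if status == "failed"],
    }


run = [("TC-01", "passed"), ("TC-02", "failed"), ("TC-03", "passed"), ("TC-04", "passed")]
print(summarize(run))
```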

7. Analyze Bug Reports to Improve Coverage

Past bugs can show you where your tests are weak. Look at production issues or support tickets to find patterns, and add more regression coverage in those spots.

Review support tickets and production incident logs regularly

Identify frequently failing modules or common error types

Add test cases for the root causes of past incidents
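Spotting frequently failing modules or common error types can start as plain counting over exported incident data. The records and the (module, error type) shape below are made up for illustration; a real export from a ticketing or incident tool would need its own parsing.

```python
from collections import Counter

Incident = tuple[str, str]  # (module, error_type), a hypothetical record shape

# Hypothetical export of production incidents and support tickets
incidents: list[Incident] = [
    ("checkout", "timeout"),
    ("checkout", "null_reference"),
    ("search", "timeout"),
    ("checkout", "timeout"),
    ("profile", "validation"),
]


def hotspots(records: list[Incident], min_count: int = 2) -> list[tuple[Incident, int]]:
    """Return (module, error_type) pairs seen at least min_count times, most frequent first."""
    return [(pair, n) for pair, n in Counter(records).most_common() if n >= min_count]


print(Counter(module for module, _ in incidents).most_common(1))  # [('checkout', 3)]
print(hotspots(incidents))  # [(('checkout', 'timeout'), 2)]
```

Each recurring pair is a candidate for a new regression case that targets its root cause.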

8. Use Unit Tests and Code Coverage to Guide Effort

Unit tests catch issues early and cheaply. Use code coverage tools to find untested paths, and focus regression testing on areas with low coverage. This gives you better quality without redundant testing.

Check coverage reports with tools like JaCoCo, Coverlet, or Istanbul

Fill in gaps by writing new tests or expanding regression suites

Collaborate with devs to enforce and monitor coverage targets
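To turn a coverage report into a to-do list, you can filter it against a threshold. This sketch assumes a Cobertura-format XML report (Coverlet and Istanbul's nyc can emit one; JaCoCo uses its own XML schema and would need different parsing) and an arbitrary 60% line-coverage threshold.

```python
import xml.etree.ElementTree as ET


def low_coverage_files(root: ET.Element, threshold: float = 0.6) -> list[tuple[str, float]]:
    """List (filename, line_rate) for classes below the threshold, least-covered first.

    Note: some reports list one source file as several <class> entries.
    """
    rows = []
    for cls in root.iter("class"):
        rate = float(cls.get("line-rate", "0"))
        if rate < threshold:
            rows.append((cls.get("filename", "?"), rate))
    return sorted(rows, key=lambda row: row[1])


sample = """<coverage line-rate="0.71">
  <packages><package name="shop"><classes>
    <class name="Cart" filename="shop/cart.py" line-rate="0.92"/>
    <class name="Discounts" filename="shop/discounts.py" line-rate="0.35"/>
    <class name="Refunds" filename="shop/refunds.py" line-rate="0.50"/>
  </classes></package></packages>
</coverage>"""

# For a real report: low_coverage_files(ET.parse("path/to/report.xml").getroot())
for filename, rate in low_coverage_files(ET.fromstring(sample)):
    print(f"{filename}: {rate:.0%}")
```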

9. Start Small With Automation and Grow

If you don’t have automated regression tests yet, don’t try to do everything at once. Start with the most critical workflows and build up over time. This reduces the pressure and makes your test suite easier to scale.

Start with login, checkout, or other high-traffic flows

Build a reusable framework from the beginning

Add one new automated test per sprint or story
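A common way to make a framework reusable from day one is the Page Object pattern: each screen's interactions live in one class, and every new test reuses it. The sketch below uses a hypothetical `Driver` protocol and an in-memory `FakeDriver` so it runs on its own; in a real suite the driver would wrap a browser automation tool such as Selenium or Playwright.

```python
from typing import Protocol


class Driver(Protocol):
    """Minimal browser-driver interface assumed by the page objects (hypothetical)."""
    def fill(self, field: str, value: str) -> None: ...
    def click(self, element: str) -> None: ...
    def current_page(self) -> str: ...


class LoginPage:
    """Page object: the only place that knows how the login form is driven."""

    def __init__(self, driver: Driver) -> None:
        self.driver = driver

    def log_in(self, username: str, password: str) -> str:
        self.driver.fill("username", username)
        self.driver.fill("password", password)
        self.driver.click("submit")
        return self.driver.current_page()


class FakeDriver:
    """In-memory stand-in so the sketch runs without a browser."""

    def __init__(self, valid_user: str, valid_password: str) -> None:
        self.valid = (valid_user, valid_password)
        self.fields: dict[str, str] = {}
        self.page = "login"

    def fill(self, field: str, value: str) -> None:
        self.fields[field] = value

    def click(self, element: str) -> None:
        credentials = (self.fields.get("username"), self.fields.get("password"))
        if element == "submit" and credentials == self.valid:
            self.page = "dashboard"

    def current_page(self) -> str:
        return self.page


# Each new automated test reuses LoginPage instead of re-scripting the form.
def test_valid_login_reaches_dashboard() -> None:
    assert LoginPage(FakeDriver("alice", "s3cret")).log_in("alice", "s3cret") == "dashboard"


def test_wrong_password_stays_on_login() -> None:
    assert LoginPage(FakeDriver("alice", "s3cret")).log_in("alice", "nope") == "login"
```

When the login form changes, only `LoginPage` needs updating, which is what keeps a growing suite maintainable.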

10. Mix Manual and Automated Regression Tests

Not everything needs to be automated immediately. Use manual tests for exploratory checks or less stable features, and automate the rest. This gives you better coverage with less effort.

Define which tests should be manual vs automated

Create a lean but high-value manual regression checklist

Gradually replace manual cases with automation
